Responsible AI at Risk: Understanding and Overcoming the Risks of Third-Party AI

A panel of experts weighs in on whether responsible AI programs effectively address externally sourced AI tools.

In collaboration with BCG

For the second consecutive year, MIT Sloan Management Review and Boston Consulting Group (BCG) have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide.

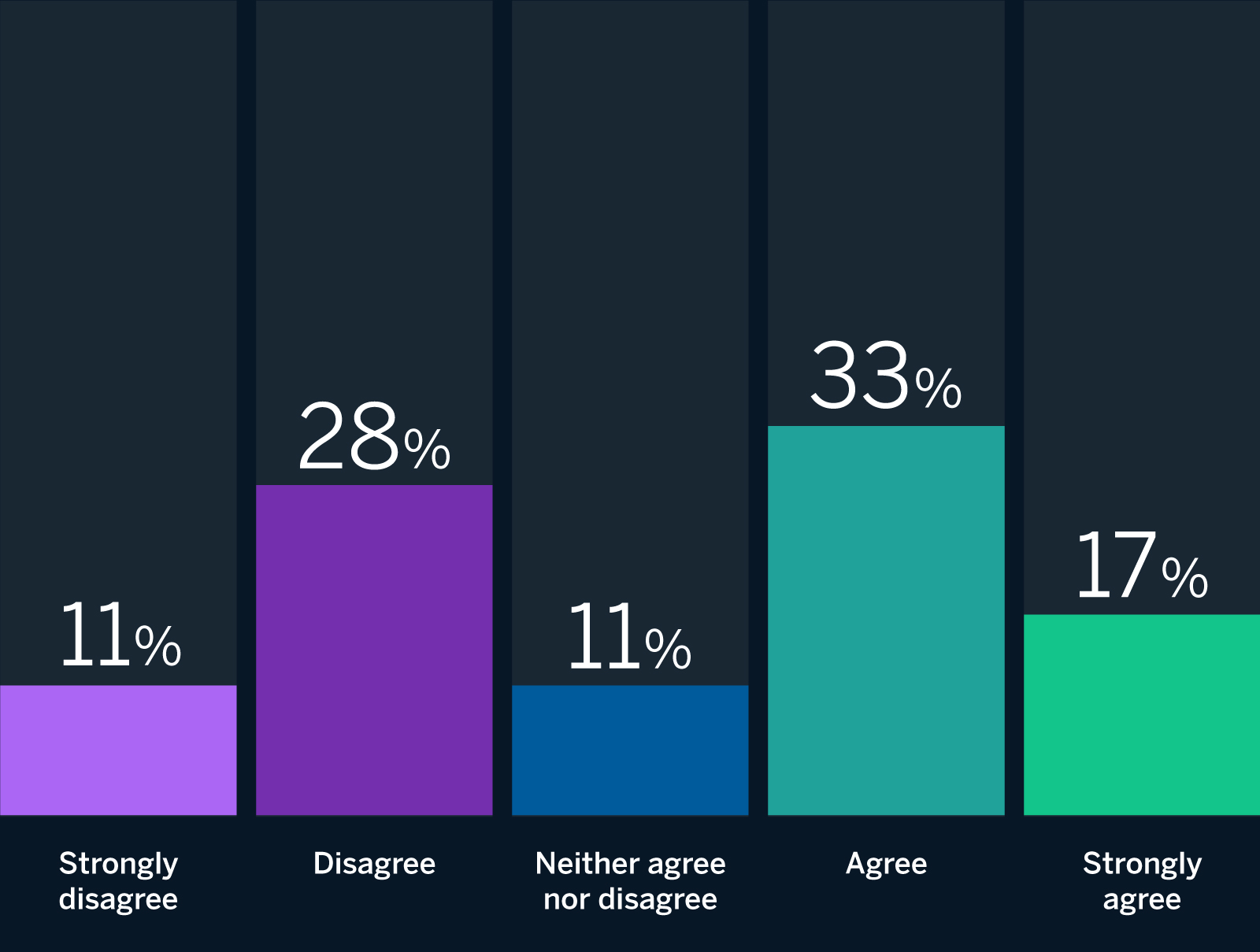

Last year, we published the report “To Be a Responsible AI Leader, Focus on Being Responsible.” This year, we’re examining the extent to which organizations are addressing risks stemming from the use of internally and externally developed AI tools. To kick things off, we asked our panelists to react to what we thought would be a straightforward provocation: RAI programs effectively address the risks of using or integrating third-party AI tools. Although half of our panelists agreed or strongly agreed with the statement, their interpretations of it varied widely. Some panelists focused on what organizations do in practice, while others described what they ought to do in principle.

Overall, there is broad agreement that RAI programs should address the risks of such tools. However, the extent to which they actually do address them in practice is another matter — one that we will dive into more deeply with our research this year. Below, we share insights from our panelists and draw on our own observations and experience working on RAI initiatives to offer recommendations on how organizations might address the risks of third-party AI tools through their RAI programs.

The Panelists Respond

Figure: Panelist responses to the statement “RAI programs effectively address the risks of third-party AI tools.”

There is a gap between whether organizations’ RAI programs address these risks and whether they should.

Source: Responsible AI panel of 18 experts in artificial intelligence strategy.

Third-Party Risks on the Rise

Our experts broadly agree that RAI programs should address the growing risks associated with the use or integration of third-party AI tools. Stanford CodeX fellow Riyanka Roy Choudhury argues that addressing these risks is a core conceptual component of an effective RAI program. “One of the main principles and focus of RAI programs is to mitigate the risks of integrating third-party AI tools,” she says. For Nitzan Mekel-Bobrov, eBay’s chief AI officer, it is also a practical necessity. “Third-party AI tools, including open-source models, vendor platforms, and commercial APIs, have become an essential part of virtually every organization’s AI strategy in one form or another, so much so that it is often difficult to disentangle the internal components from the external ones,” he explains. “Consequently, an AI program needs to include policies on the use of third-party tools, evaluation criteria, and the necessary guardrails.”

And that practical necessity is growing. For example, Richard Benjamins, chief AI and data strategist at Telefónica, says, “AI as a service is a trend, and thus increasingly more organizations will use AI tools in the cloud that were built by others.” Citing the European Union’s AI Act and other regulations, he argues that “it is therefore of utmost importance for responsible AI programs to consider the full AI value chain, in addition to in-house AI developments.”

Despite broad normative agreement that RAI programs should address these types of risks, there is a lack of consensus as to how they should do so. Oarabile Mudongo, a policy specialist at the Africa AI Observatory, says, “To effectively address the risks associated with third-party AI tools, RAI programs should include a comprehensive set of policies and procedures, such as guidelines for ethical AI development, risk assessment frameworks, and monitoring and auditing protocols.” And Radhika Alla, vice chair of digital platforms at the Mayo Clinic, asserts that “the same RAI rigor used to develop in-house AI models should be applied to evaluating third-party AI platforms and services.”

But Belona Sonna, a Ph.D. candidate in Australian National University’s Humanising Machine Intelligence program, disagrees, arguing that “RAI programs alone cannot effectively address the risks associated with the use or integration of third-party AI tools.” Rather, she recommends implementing “a related risk management system for third-party tools that will serve to ensure perfect cohesions between the two entities according to RAI principles.” H&M’s head of responsible AI, Linda Leopold, agrees that assessing the risks of third-party AI tools “requires a different set of methods than internally built AI systems do.”

The Practical Challenges of Risk Management

UNICEF’s digital policy specialist, Steven Vosloo, says the extent to which an organization’s RAI program addresses third-party AI risks in practice depends on the rigor of its program, and he acknowledges that “determining how to fully assess the risks (real or potential) in third-party AI tools can be challenging.”

For David R. Hardoon, chief data and AI officer at UnionBank of the Philippines, the extent to which an RAI program addresses the risks of third-party AI tools is a question of how comprehensive it is. As he explains, “The considerations of a comprehensive responsible AI program would be agnostic with regard to the platform used for development,” but in practice RAI programs often fail to “extend their risk assessments to system-related risks, and therefore they would not necessarily cover the respective integration risks that may exist when dealing with third-party AI tools.”

“An important gap remains at the procurement phase … where, whether due to resource and expertise constraints or the absence of enabling RAI policies, many companies simply do not conduct tailored assessments of the third-party AI solutions they are buying — including the models themselves. As AI innovation accelerates — particularly with the adoption of complex models like large language models and generative AI, which remain challenging to evaluate from a technical standpoint — the need for RAI programs to develop robust third-party risk procurement policies that include AI model assessments is more critical than ever.”

Simon Chesterman, senior director of AI governance at AI Singapore, says that one of the biggest challenges is “that we don’t know what we don’t know.” Indeed, the rapid adoption of generative AI and other tools is increasing the risks posed by so-called shadow AI: uses of AI that the organization may be entirely unaware of.

Another challenge is the lack of industry standards. As Responsible AI Institute executive director Ashley Casovan explains, “Due to a lack of maturity in what RAI programming across organizations and industry actually means, there is yet to be an industry best practice or standard for integrating third-party tools.” Similarly, Tshilidzi Marwala, rector of United Nations University, argues that certifying third-party AI tools as responsible “will require minimum standards that do not currently exist.” Consequently, procurement and post-procurement policies are key. As Alka Patel, former U.S. Department of Defense chief of responsible AI, notes, “An organization needs to build in mechanisms that allow it to continue to evaluate, update, and integrate the third-party RAI tool post-procurement.”

Staying Vigilant Amid Change

In fact, effective risk mitigation requires a continual, iterative approach to RAI. As Mudongo explains, “A proactive approach to managing the risks of third-party AI tools could include ongoing monitoring of AI solutions and regular updates to RAI programs as new risks emerge.” Similarly, Alla asserts that “RAI programs and frameworks need continuous oversight and governance to ensure that the key principles of RAI are applicable and relevant to evaluating third-party AI tools and are augmented where needed.” Giuseppe Manai, cofounder, chief operating officer, and chief data security officer at Stemly, agrees that “programs must be flexible and dynamic to keep pace with the evolution of AI tools.”

Paula Goldman, chief ethical and humane use officer at Salesforce, contends that “this is especially important now, as businesses race to announce new partnerships and invest in startups to help them bring generative AI to market.”

Recommendations

For organizations seeking to address the risks of third-party AI tools through their RAI efforts, we recommend the following:

- Minimize shadow AI. It is easier to manage what you can measure. To effectively mitigate the risks of third-party AI tools, organizations need a clear view and inventory of the uses of AI within their operations.

- Ensure that third-party risk mitigation is part of your RAI program. Full awareness of AI is not enough. To effectively detect and mitigate third-party risks, your RAI program should extend to all uses of AI across the organization, whether built internally or sourced externally, including through any relevant procurement and post-procurement policies.

- Continually update and iterate to address new risks. As with all other components of RAI, third-party risk mitigation is not a one-off exercise. Whether due to advancements in AI or legal and regulatory developments, plan to regularly revisit your approach to third-party risk mitigation in your RAI program.

“Responsible AI programs should cover both internally built and third-party AI tools. The same ethical principles must apply, no matter where the AI system comes from. Ultimately, if something were to go wrong, it wouldn’t matter to the person being negatively affected if the tool was built or bought. However, from my experience, responsible AI programs tend to focus primarily on AI tools developed by the organization itself.”