Mature RAI Programs Can Help Minimize AI System Failures

In collaboration with

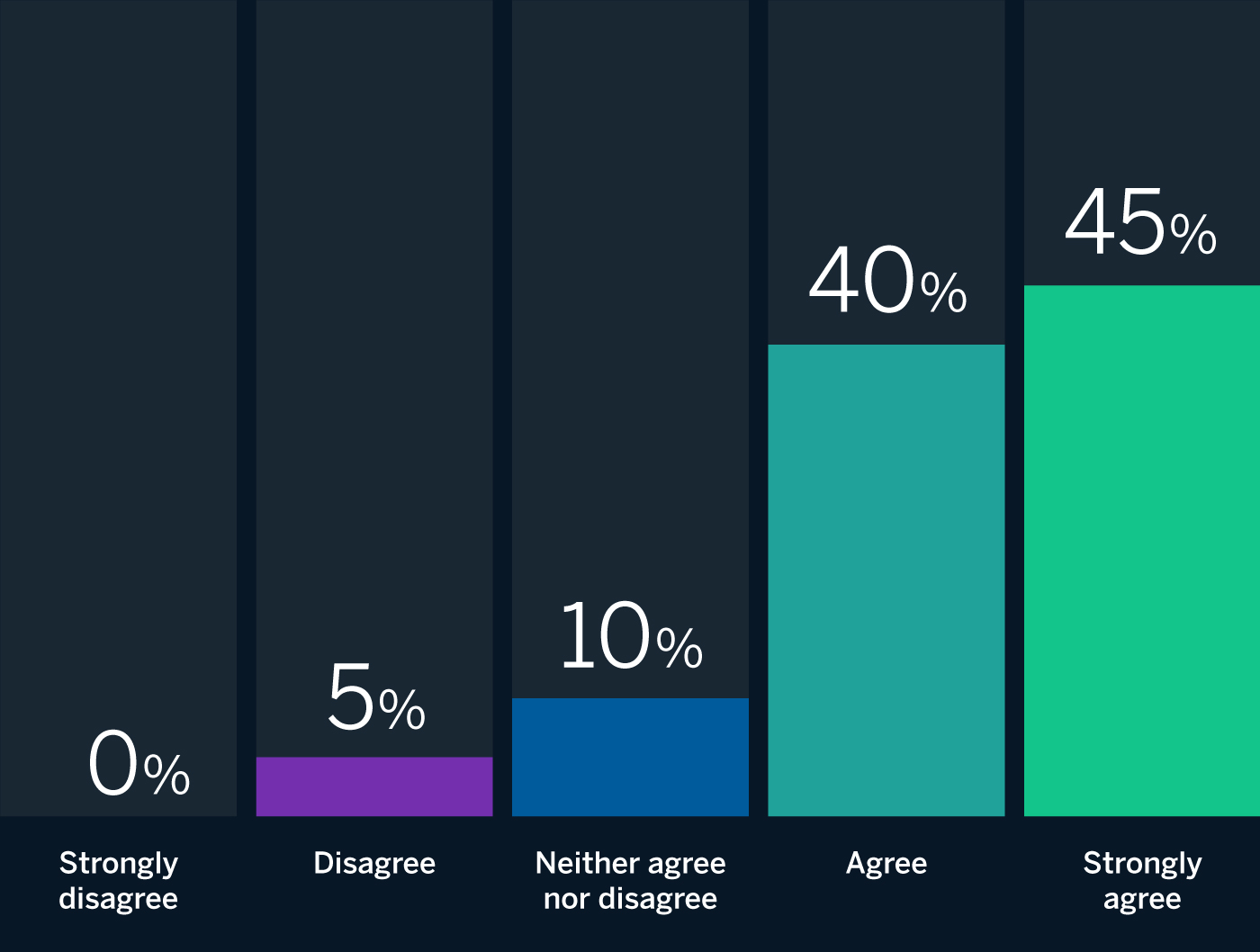

BCGMIT Sloan Management Review and Boston Consulting Group have assembled an international panel of AI experts that includes academics and practitioners to help us gain insights into how responsible artificial intelligence (RAI) is being implemented in organizations worldwide. In our global survey, 70% of respondents acknowledged having had at least one AI system failure to date. This month, we asked our panelists to react to the following provocation on the topic: Mature RAI programs minimize AI system failures. The respondents overwhelmingly supported this thesis, with 85% (17 out of 20) either agreeing or strongly agreeing with it. That said, even among those who agree that mature RAI helps minimize AI system failures, they caution that it is not by itself a silver bullet. Other factors play a role as well, including how “failure” and “mature RAI” are defined, governance standards, and a host of technological, social, and environmental considerations. Below, we share insights from our panelists and draw on our own observations and experience working on RAI initiatives to offer recommendations for organizations seeking to minimize AI system failures through their RAI efforts.

The Panelists Respond: “Mature RAI programs minimize AI system failures.” Eighty-five percent of our expert panelists agree. (Source: Responsible AI panel of 20 experts in artificial intelligence strategy.)

Putting Maturity and Failure in Context

For many experts, the extent to which mature RAI programs can minimize AI system failures depends on what we mean by a “mature RAI program” and “failure.” Some failures are straightforward and easy to detect. For example, one of the most visible categories of AI failure involves bias. As Salesforce’s chief ethical and humane use officer Paula Goldman observes, “Some of AI’s greatest failures to date have been the product of bias, whether it’s recruiting tools that favor men over women or facial recognition programs that misidentify people of color. Embedding ethics and inclusion in the design and delivery of AI not only helps to mitigate bias — it also helps to increase the accuracy and relevancy of our models, and to increase their performance in all kinds of situations once deployed.” Mature RAI programs are designed to preempt, detect, and rectify bias and other visible challenges with AI systems.

But as AI systems grow more complex, failures can grow harder to pinpoint and more difficult to address. As Nitzan Mekel-Bobrov, eBay’s chief AI officer, explains, “AI systems are often the product of many different components, inputs, and outputs, resulting in a fragmentation of decision-making across agents. So, when failures occur, it is difficult to ascertain the precise reason and point of failure. A mature responsible AI framework accounts for these challenges by incorporating into the system development life cycle end-to-end tracking and measurement, governance over the role played by each component, and integration into the final decision output, and it provides the enabling capabilities for preventing AI system failures ahead of the technology’s decision-making.”

To tackle both visible and less visible failures, a mature RAI program should be broad in scale and/or scope, addressing the full life cycle of an AI system and its potential for negative outcomes. As Linda Leopold, head of responsible AI and data at H&M, argues, “For a responsible AI program to be considered mature, it should be both comprehensive and widely adopted across an organization. It has to cover several dimensions of responsibility, including fairness, transparency, accountability, security, privacy, robustness, and human agency. And it has to be implemented on both a strategic (policy) and operational level.” She adds, “If it ticks all these boxes, it should prevent a wide range of potential AI system failures.” Similarly, David Hardoon, chief data and AI officer at UnionBank of the Philippines, explains, “A mature RAI program should cover the breadth of AI in terms of data and modeling for both development and operationalization, thus minimizing potential AI system failures.”

In fact, the most mature RAI programs are embedded in an organization’s culture, leading to better outcomes. As Slawek Kierner, senior vice president of data, platforms, and machine learning at Intuitive, explains, “Mature responsible AI programs work on many levels to increase the robustness of resulting solutions that include AI components. This starts with the influence RAI has on the culture of data science and engineering teams, provides executive oversight and clear accountability for every step of the process, and on the technical side ensures that algorithms as well as data used for training and predictions are audited and monitored for drift or abnormal behavior. All of these steps greatly increase the robustness of the whole AI DevOps process and minimize risks of AI system failures.”

The Role of Emerging Standards

Some experts caution that without formal or widely adopted RAI standards, the impact of mature RAI programs on AI system failures is harder to establish. The Responsible AI Institute’s executive director, Ashley Casovan, argues, “In the absence of a globally adopted definition or standard framework for what responsible AI involves, the answer should really be ‘It depends.’” According to Oarabile Mudongo, a researcher at the Center for AI and Digital Policy in Johannesburg, such standards are on their way: “Companies are becoming more involved in shaping AI-related legislation and engaging with regulators at the country level. Regulators are taking notice as well, lobbying for AI regulatory frameworks that include enhanced data safeguards, governance, and accountability mechanisms. Mature RAI programs and regulations based on these standards will not only assure the safe, resilient, and ethical use of AI but also help minimize AI system failure.”

Philip Dawson, head of policy at Armilla AI, cautions that a superficial approach to AI testing across industry has also meant that most AI projects either fail in development or risk contributing to real-world harms after they are released. “To reduce failures,” he notes, “mature RAI programs must take a comprehensive approach to AI testing and validation.” Jaya Kolhatkar, chief data officer at Hulu, agrees that “to run an RAI program correctly, there needs to be a mechanism that catches failures faster and a strong quality assurance program to minimize overall issues.” In sum, standards for AI testing and quality assurance would help organizations evaluate the extent to which RAI programs minimize AI system failures.

Acknowledging Maturity’s Limitations

Even with the most mature RAI programs in place, failures can still occur. As Aisha Naseer, director of research at Huawei Technologies (UK), explains, “The maturity level of RAI programs plays a crucial role in contemplating AI system failures; however, there is no guarantee that such calamities will not happen. This is due to multiple potential causes of AI system failures, including environmental constraints and contextual factors.”

Likewise, Boeing’s vice president and chief engineer for sustainability and future mobility, Brian Yutko, notes, “RAI programs may eliminate some types of unintended behaviors from certain learned models, but overall success or failure at automating a function within a system will be determined by other factors.” Steven Vosloo, digital policy specialist in UNICEF’s Office of Global Insight and Policy, similarly argues that RAI programs can reduce but not minimize AI system failures: “Even if developed responsibly and not to do harm, AI systems can still fail. This could be due to limitations in algorithmic models, poorly scoped system goals, or problems with integration with other systems.” In other words, RAI is just one of several potential factors that affect how AI systems function, and sometimes fail, in the real world.

Agree

“Responsible AI programs consider in advance the potential negative side effects of the use of AI by ‘forcing’ teams to think about relevant general questions. This facilitates the consideration and detection of failures of the AI system that our societies want to avoid. However, detecting such potential failures alone does not necessarily avoid them, as it requires proper action of the organization. Organizations that have mature RAI programs are likely to act properly, but it is not a guarantee, especially when the ‘failure’ is beneficial for the business model.”

Recommendations

A mature RAI program goes a long way toward minimizing or at least reducing AI system failures, since such a program is broad in scale and/or scope and addresses the full life cycle of an AI system, including its design and, critically, its deployment in the real world. Still, the absence of formal or widely adopted RAI standards can make it hard to assess the extent to which an RAI program mitigates such failures. In particular, there is a need for better testing and quality assurance frameworks. And even with the most mature RAI program in place, AI system failures can still happen.

In sum, organizations hoping that their RAI efforts will help reduce or minimize AI system failures should focus on the following:

- Ensure the maturity of your RAI program. Mature RAI is broad in scale and/or scope, addressing a wide array of substantive areas and spanning wide swaths of the organization. The more mature your program, the likelier it is that your efforts will prevent potential failures and mitigate the effects of any that do occur.

- Embrace standards. RAI standardization, whether through new legislation or industry codes, is a good thing. Embrace these standards, including those around testing and quality assurance, as a way to ensure that your efforts are helping to minimize AI system failures.

- Let go of perfection. Even with the most mature RAI program in place, AI system failures can happen due to other factors. That’s OK. The goal is to minimize AI system failures, not necessarily eliminate them. Turn failures into opportunities to learn and grow.

“AI systems can fail or succeed, and any successful AI system must be ethically responsible. The idea that engineering and ethics can be separated in certain ways should be rejected. Saying, ‘This system is itself successful but only ethically fails,’ is a contradiction in terms. That’s similar to saying, ‘He is a great person but just harms others.’ If an AI system is really successful, it must be ethically mature. Hence, if it is not ethically mature, it is not a successful AI.”